We’ve all seen it—a couple on a date, politicians, friends, or colleagues talking right past each other, trapped in a moment of profound misunderstanding over the meaning of a single word. For me, that moment came when my partner, a New Yorker through and through, told me, a Midwesterner, to take “the next left” while we were driving to my parents’ farm. When we reached the nearest traffic light, I merged into the left lane and started to turn. The next thing I knew, I heard yells of dismay as my partner frantically told me to stay straight and turn left at the intersection after this one.

After considerable confusion, it became apparent that, to her, “the next left” meant not the left at the closest intersection, but the left at the one after it. I was shocked that anyone could think this. “Next” means, well, NEXT. In the spirit of research and scientific inquiry, I began calling mutual friends from her home community to investigate her interpretation, only to find that they held similar opinions. To me, “next” meant one thing. To her, it meant something different. This one word had quite suddenly become a point of confusion because of internal assumptions about linguistic meaning that neither I nor my partner even knew we had.

So, what broke down? Why did this “error” in communication occur?

From an epistemological standpoint, she and I had different ontologies—different conceptions about the specific nature of being, properties, and relationships—surrounding the meaning of the word “next.”

This kind of ontological confusion also happens between humans and machines. In fact, this very problem of language and meaning is one of the biggest (and most exciting) current challenges in the field of artificial intelligence and human-machine teaming. In short, when I tell a machine to “turn at the next right,” how can I be sure that its “understanding” of next is the same as mine? And how can I teach or give the machine the information and context it needs to lead us to a common ground of understanding?

If that example seems trivial, consider this: When it comes to puzzles of ontology and linguistics in the world of artificial intelligence and machine learning, the big challenges and applications are often shaped much the same as small ones. Teaching a machine that “When I say X, it means this” and “When I say Y, it means that” may be the first step in training artificial intelligence systems to be able to interact with, aid, and learn alongside human participants using a combination of images, spoken or written words, and other inputs and outputs.

A fully realized, ontologically savvy machine could work with its human teammate to make complex analyses and recommendations in dynamic, fast-moving environments far faster and far more accurately than a human ever could alone. From recognizing what a word means, an AI system in a fighter jet could progress to recognizing enemy aircraft, then provide bespoke tactical recommendations mid-combat based on the particular skill level, performance history, and emotional profile of a pilot. Learning software could automatically adjust lessons and teaching style to its user; a rookie helicopter mechanic could use their computer assistant to help them identify tools or machine parts or even find and diagnose problems. The ultimate goal is to reduce cognitive load in complex tasks: putting as much burden on the machine as possible and dramatically democratizing the ability of normal people to learn faster, perform at a higher level, or handle tasks previously only accessible to those with decades of specialized training.

But first, the machine needs to know what I really mean when I say “next.”

The key to unlocking the enormous potential of human-machine teaming and a new frontier of artificial intelligence, known as symbiotic intelligence, lies in lexically entraining machines to reach a shared ontology of words.

In this post, I will explore what an ontology is, why creating a shared ontology is so challenging, and what it means to lexically entrain. I will also describe how my work as an intern with Galois last summer not only helped me better understand these concepts, but also worked toward improving a computer co-performer’s capacity to solve real-world problems.

Ontological Repair and Lexical Entrainment

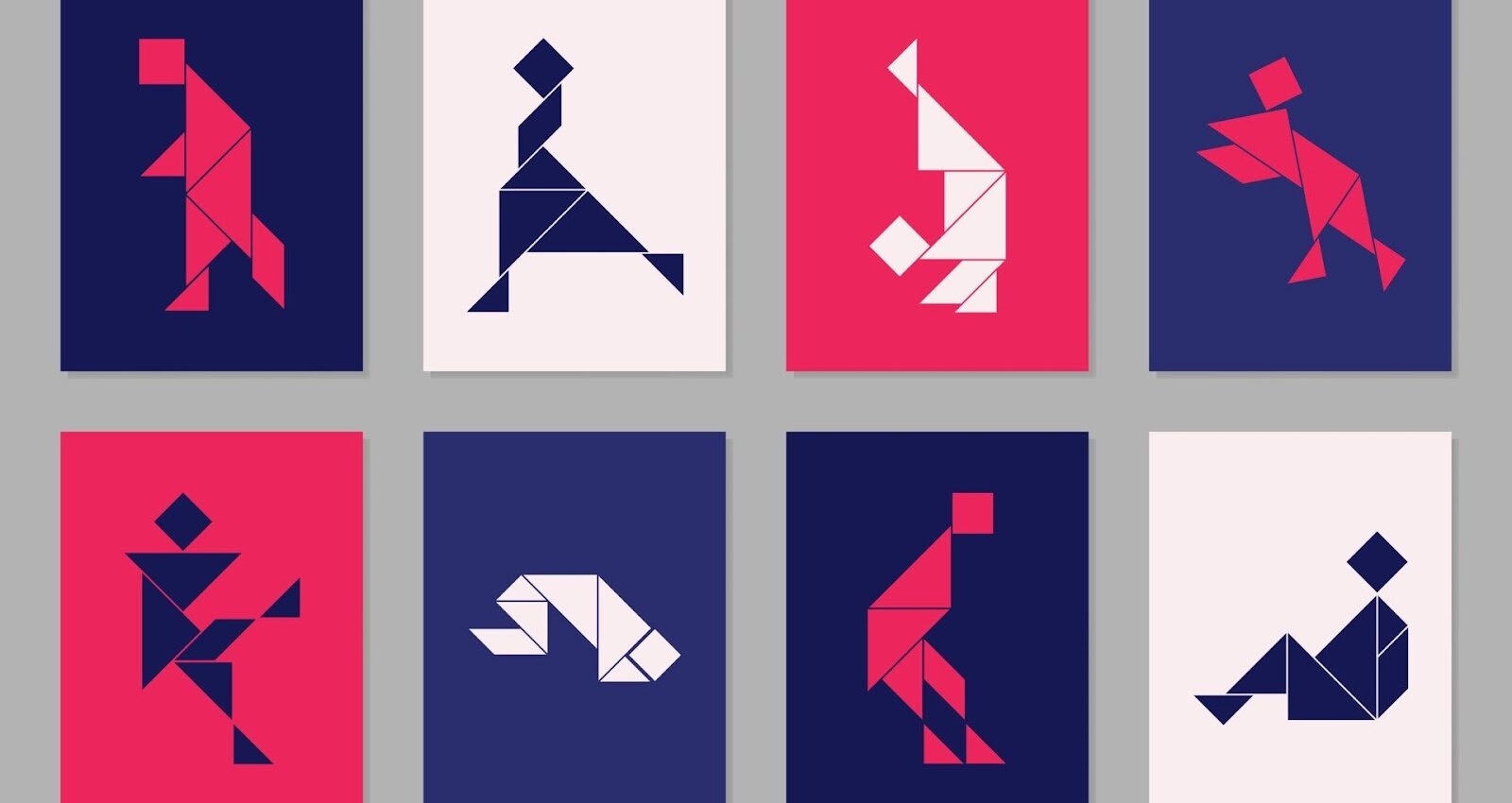

Whether or not you have ever been part of an exchange like the one I related at the beginning of this post, I’d like to walk you through a specific example of how we construct and repair ontologies. Imagine that we are playing a game. Look at the tangram images shown here. I am going to assign them a hidden order, different from the order in which they are presented. My goal in this game is to formulate phrases that communicate the relative positions of the figures without using ordinals or colors.

I may say something like, “the first one is a man praying.” You may look at the figures above and see one (or perhaps a few) that fits your idea of what a praying individual looks like, but I would hazard a guess that you and I are thinking of different figures, depending on how different our backgrounds are. Someone whose ritual practices involve getting on the ground and prostrating themselves may be inclined to pick the second figure from the left in the bottom row, whereas someone whose prayers require them to stand and bow may select the figure to the right of it. Someone whose practices are mostly meditation and contemplation may pick the first figure in the top row or the last figure in the bottom row.

This is just one example of how one concept, prayer, can evoke many different interpretations based on differing backgrounds or predispositions. Now, say that you recognize that our ideas of what prayer looks like are different. In other words, you recognize that our existing ontologies are not aligned with regard to the image that “prayer” conjures. To get us on the same page, you may say something like, “I see a few figures that could be praying. Is yours the one on their hands and knees?” In this case, you are attempting to repair our ontology by proposing additional information that we can add to our shared understanding. For a concept like prayer, the interpretation is most likely fixed for a given individual or culture; other, more complex concepts may be more dynamic and require frequent repairs to the shared ontology.

Once we have bounced back and forth, possibly many times, we will hopefully arrive at a set of words that we both agree uniquely identifies the figure in question. Once we do, we have lexically entrained this phrase to the image. Lexical entrainment is the process of adopting shared reference terms and binding them to an object or concept. We do this constantly without even realizing it. Every time you have given or been given an instruction and someone asked for clarifying details, the two of you were aligning your ontologies to lexically entrain a given concept.

Now imagine I hadn’t said “the first one is a man praying” but rather “I see a set of kitchen utensils.” Rightfully, you would likely be very confused. You may make a futile attempt to guess what I could possibly mean by that, but more than likely you’ll flat out say you have no idea what I’m talking about. This is an example of a rejected reference, or an attempt to lexically entrain where one party cannot resolve what is being said. This would result in a new phrase being proposed.
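The exchange described in this section can be sketched as a small loop. This is a toy illustration under stated assumptions, not the system built at Galois: the figure ids, the feature sets, and the names `FIGURES`, `Listener`, and `entrain` are all hypothetical, and a real listener would not reduce a tangram to a handful of keywords.

```python
from dataclasses import dataclass, field

# Hypothetical figures, each reduced to the features a listener might perceive.
FIGURES = {
    "A": {"praying", "kneeling"},
    "B": {"praying", "standing"},
    "C": {"dancing", "standing"},
}


@dataclass
class Listener:
    figures: dict
    lexicon: dict = field(default_factory=dict)  # entrained phrase -> figure id

    def resolve(self, terms):
        """Return ids of figures whose features include every term in the set."""
        return [fid for fid, feats in self.figures.items() if terms <= feats]

    def hear(self, terms):
        """Classify a proposed reference as 'accept', 'refine', or 'reject'."""
        matches = self.resolve(set(terms))
        if len(matches) == 1:
            # Exactly one figure fits: bind the phrase to it (entrainment).
            self.lexicon[" ".join(sorted(terms))] = matches[0]
            return "accept", matches
        if not matches:
            # Nothing fits: the reference cannot be resolved at all.
            return "reject", matches
        # Several figures fit: ask the speaker for more detail.
        return "refine", matches


def entrain(listener, target_features, first_proposal):
    """Speaker side: propose, then add one target feature per 'refine' reply."""
    terms = list(first_proposal)
    history = []
    while True:
        verdict, _ = listener.hear(terms)
        history.append((tuple(terms), verdict))
        if verdict != "refine":
            return history
        unused = sorted(target_features - set(terms))
        if not unused:
            return history  # nothing left to add; the phrase stays ambiguous
        terms.append(unused[0])


if __name__ == "__main__":
    listener = Listener(FIGURES)
    for phrase, verdict in entrain(listener, {"praying", "kneeling"}, ["praying"]):
        print(phrase, "->", verdict)
    print(listener.hear(["kitchen utensils"])[0])
```

In this sketch, “praying” alone matches two figures and triggers a refinement, “praying” plus “kneeling” is accepted and stored in the lexicon, and “kitchen utensils” is rejected outright. The loop simply stops on a rejection; proposing a fresh phrase, as a human speaker would, is left out to keep the example short.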

Training Computers as Co-Performers

Taken together, these examples form a very simple set of operations that we can use to think about working with ontologies from a programmatic standpoint: propose, refine, accept, and reject. In my work with Dr. Eric Davis at Galois, we looked into how to get a computer co-performer (a computer that takes over some function or role on a human-based team) to refine and accept a reference phrase. Using phrases from the Stanford repeated reference corpus, we built a pipeline that extracts key information from each phrase. We then formatted that information into a query, which was submitted to the Bing Images API. We took the top handful of scraped images, processed them to be more uniform, and compared them using the UQI (universal quality index) measure to assess how similar the scraped images were. Using this protocol, we were able to achieve better-than-human performance with fewer refinements and less time than the human test subjects.
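The similarity step can be illustrated with a small, dependency-free sketch of the universal quality index. It is not the pipeline's actual code, and it rests on several assumptions: images are represented as nested lists of grayscale values that have already been resized to the same shape, the whole image is treated as a single window (the index as usually defined averages the same quantity over sliding windows), and the function names `uqi` and `mean_pairwise_uqi` are hypothetical. For two images with means $\bar{x}, \bar{y}$, variances $\sigma_x^2, \sigma_y^2$, and covariance $\sigma_{xy}$, the index is $Q = 4\sigma_{xy}\bar{x}\bar{y} / \big((\sigma_x^2 + \sigma_y^2)(\bar{x}^2 + \bar{y}^2)\big)$.

```python
from itertools import combinations
from statistics import fmean


def uqi(image_a, image_b):
    """Universal quality index of two equal-sized grayscale images.

    Simplification: the whole image is one window, so this is a global
    score rather than an average over local sliding windows.
    """
    x = [float(p) for row in image_a for p in row]
    y = [float(p) for row in image_b for p in row]
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("images must have the same size and at least two pixels")
    n = len(x)
    mean_x, mean_y = fmean(x), fmean(y)
    var_x = sum((a - mean_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mean_y) ** 2 for b in y) / (n - 1)
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / (n - 1)
    denominator = (var_x + var_y) * (mean_x ** 2 + mean_y ** 2)
    if denominator == 0:
        raise ValueError("UQI is undefined when both images are flat or both have zero mean")
    return 4 * cov_xy * mean_x * mean_y / denominator


def mean_pairwise_uqi(images):
    """Average UQI over every pair of images, as a rough agreement score."""
    return fmean([uqi(a, b) for a, b in combinations(images, 2)])


if __name__ == "__main__":
    original = [[0, 50], [100, 150]]
    inverted = [[150, 100], [50, 0]]
    print(uqi(original, original))
    print(uqi(original, inverted))
```

A score near 1 means two images agree in brightness, contrast, and structure; identical images score 1, and an image compared with its brightness-reversed copy (as in the usage above) scores -1. A high average over the scraped set would suggest that the query returned consistent images, which is one plausible way to read "how similar the scraped images were."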

This is just one step toward creating fully functioning ontology-based AI, or symbiotic intelligence, which will allow for better, more explainable human-computer co-performance, and there is much work left to be done. Once we have a fully ontological model with the capacity to use domain expert knowledge, systems will be more open and better able to explain their predictions. This explanation comes in the form of walking back the ontology. For example, the model could explain that “a figure praying” was too ambiguous, and that it therefore added “on their hands and knees” to the phrase.

This sort of explainable reasoning, sussing out exactly how and what knowledge was harvested from existing information to formulate novel knowledge, could be crucial for advancing human-computer co-performance. In our example, this may look like the model learning the difference between “on their hands and knees” and “standing,” and learning what a human means when they say that they saw multiple figures in “prayer” that this distinction could tell apart. Using these two pieces of information, the model is able to deduce that there is a figure for which “praying while standing” would be an accepted lexical entrainment.

These are just a few of the capacities that ontology-based learning would afford us. With these, we can construct highly transparent and dynamic models that could ultimately be used for computer-in-the-loop technologies capable of rapidly analyzing complex models and data in real time and giving human partners concise recommendations about how to act, for example in crisis response or resource management scenarios. If, in these cases, the expert assesses that the context has changed, they can quickly make minor changes to the ontology, which will propagate out to the model’s full worldview, allowing quick updates to have a large impact on the model’s performance.
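One way to picture how a small expert edit can ripple through a model's worldview is a toy is-a hierarchy in which concepts inherit properties from their parents. This is only a sketch under assumptions of my own: the `Ontology` class, its methods, and the crisis-response concepts below are hypothetical and do not describe any particular system.

```python
class Ontology:
    """Toy is-a hierarchy: concepts inherit properties from their ancestors."""

    def __init__(self):
        self.parent = {}      # concept -> parent concept, or None for a root
        self.properties = {}  # concept -> properties asserted directly on it

    def add(self, concept, parent=None, **properties):
        self.parent[concept] = parent
        self.properties[concept] = dict(properties)

    def revise(self, concept, **properties):
        """Expert edit: change properties asserted on a single concept."""
        self.properties[concept].update(properties)

    def view(self, concept):
        """Resolve a concept's full property set; nearer ancestors override."""
        chain = []
        while concept is not None:
            chain.append(concept)
            concept = self.parent[concept]
        resolved = {}
        for ancestor in reversed(chain):  # root first, so children win
            resolved.update(self.properties[ancestor])
        return resolved


if __name__ == "__main__":
    onto = Ontology()
    onto.add("road", passable=True)
    onto.add("bridge", parent="road", load_limit_tons=20)
    onto.add("footbridge", parent="bridge", load_limit_tons=1)

    print(onto.view("footbridge"))
    onto.revise("road", passable=False)  # e.g. the expert reports flooding
    print(onto.view("footbridge"))
```

Because every concept resolves its properties through its ancestors at lookup time, the single edit to "road" changes what the model believes about "bridge" and "footbridge" as well, without touching either of them directly.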

I don’t know if adding a computer in the loop will save you and your partner from arguing over the best way to convey when you should turn, and frankly, I won’t be the one to find out. However, having the capacity to construct ontology-based learning systems will greatly improve programmers’ ability to explore, explain, and exploit domain knowledge in safety-critical environments. Over my summer internship, we were only able to scratch the surface of what this technology is capable of, but we are confident in its capacity to change how artificial intelligence is used and thought of in a wide variety of applications, with a great societal impact in the years to come.