This post focuses on Galois’s silicon projects and related research efforts around asynchronous circuit design as we approach the 27th IEEE International Symposium on Asynchronous Circuits and Systems (ASYNC 2021), to be hosted by Galois as a virtual conference September 7–10, 2021.

Since our founding, most of Galois’s R&D projects have focused on software and software capabilities in research areas including formal specification and static analysis (e.g., Cryptol, SAW, and s2n verification for Amazon), testing (e.g., Crux), secure computation and fully homomorphic encryption (e.g., Verona and RAMPARTS), computer security (e.g., ROCKY, MATE, 3DCoP, and ADIDRUS), and machine learning (e.g., ADAPT and PPAML).

Over the past few years, though, we’ve also applied Galois’s strengths in formal specification and verification to silicon, generating formally verified cryptographic circuits from Cryptol specifications in the GULPHAAC, 21st Century Cryptography, and BASALISC projects, and designing systems-on-chip for FPGA and application-specific integrated circuit (ASIC) applications in those projects as well as in SHAVE, BESSPIN, and GLASS-CV.

Galois often does things differently, and our approach to ASIC design in the GULPHAAC, 21st Century Cryptography, GLASS-CV, and BASALISC projects is an example of that. Our silicon design flow uses multiple high-level hardware description languages and formally verified specifications, and our designs use asynchronous circuits extensively. So what exactly are asynchronous circuits, and why have we fabricated three chips (and started work on a fourth) that feature asynchronous designs?

Synchronous Circuits

In most integrated circuits, a fixed-frequency clock signal is distributed throughout the circuit to keep its components and storage elements synchronized. For example, if the processor in your laptop is clocked at 2 GHz, the clock triggers that synchronization two billion times each second. Circuits that rely on such clock signals are called synchronous. Synchronous designs are the de facto industry standard. Still, they have several inherent problems that make the chip design process extremely complex and can unnecessarily reduce chip performance and increase chip power consumption.

Clock Skew

In a synchronous design, it’s important that the clock signal be distributed properly across all the different parts of a chip because it is used to determine when an output signal generated by one part of the chip can be used as an input signal to another. Clock skew occurs when different parts of the circuit receive the clock signal at different times, which can be caused by the clock signal being carried to different parts of the circuit over wires of different lengths. In the presence of clock skew, one part of the chip might use another’s output before it is ready, with unpredictable results.

For example, imagine that an addition circuit takes two inputs that come from different parts of the chip. To compute the function a + b, inputs a and b must both be ready at the same time, when the clock signal tells the addition circuit to use them; if one of the inputs isn’t ready—perhaps it’s still being computed, or it’s an old value left over from a previous calculation—the result of the addition will be wrong, corrupting the computation.
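
The adder scenario above can be sketched in a few lines of Python. This is only an illustrative model, not a circuit simulator: the signal histories, the times (in nanoseconds), and the helper names `value_at` and `adder_output` are all hypothetical. Each input is a list of (time, value) changes, and the adder reads both inputs at the moment its copy of the clock edge arrives.

```python
def value_at(changes, t):
    """Return the value a signal holds at time t.

    `changes` is a list of (time, new_value) pairs sorted by time.
    """
    value = None
    for change_time, new_value in changes:
        if change_time <= t:
            value = new_value
    return value


def adder_output(a_changes, b_changes, sample_time):
    """Add whatever values a and b hold when the adder's clock edge arrives."""
    return value_at(a_changes, sample_time) + value_at(b_changes, sample_time)


# Hypothetical signal histories: old values at t=0, new values arriving later.
a_changes = [(0.0, 1), (0.80, 5)]  # a becomes 5 at 0.80 ns
b_changes = [(0.0, 2), (0.90, 6)]  # b becomes 6 at 0.90 ns

# The clock edge is meant to arrive at 1.00 ns, after both inputs settle.
on_time = adder_output(a_changes, b_changes, 1.00)  # 5 + 6 = 11

# With skew, the adder sees the edge at 0.85 ns, while b still holds its old value.
skewed = adder_output(a_changes, b_changes, 0.85)   # 5 + 2 = 7
```

In this model the skewed adder silently combines a fresh a with a stale b, which is the kind of corruption described above.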

Worst-Case Design

Each individual component in a synchronous design has a maximum clock frequency that it can support, determined by the complexity of its internal logic. For example, a circuit that performs addition on two 32-bit integers can finish its computation much faster than a circuit that performs multiplication on those same two 32-bit integers, because multiplication is more complex than addition. In addition, many computations are data-dependent, meaning that the time they take depends on their inputs. In addition and multiplication, for example, each result bit is generated not only from the corresponding bits of the two operands but also from other intermediate results, akin to how you would perform addition or multiplication by hand on paper.

When multiple components are connected together on a chip, the overall clock frequency at which the chip can run is determined by the slowest component. Even if the rest of the components on the chip could run faster in isolation, they cannot do so when controlled by a global clock that runs at a safe frequency for the slowest component.

In addition, the maximum speed at which even an isolated synchronous component can run is limited by its worst-case performance, the longest it can possibly take to compute its output, because it must always complete its computation within a fixed clock period. Optimization of data-dependent circuits therefore focuses on the worst-case inputs, resulting in overly complex designs that contain special circuitry to handle various worst-case scenarios.
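
As a rough illustration of how the slowest component sets the pace, the sketch below computes the highest safe global clock frequency from a table of worst-case component delays. The component names and delay values are made up for the example, and the model ignores real-world effects such as setup time and wire delay.

```python
# Hypothetical worst-case delays in nanoseconds, for illustration only.
worst_case_delay_ns = {"adder": 0.4, "multiplier": 1.0, "shifter": 0.25}


def max_clock_ghz(delays_ns):
    """Highest safe global clock: the period must cover the slowest component."""
    period_ns = max(delays_ns.values())
    return 1.0 / period_ns  # a 1 ns period corresponds to 1 GHz


def idle_fraction(delays_ns):
    """Fraction of each clock period a component spends waiting, at worst."""
    period_ns = max(delays_ns.values())
    return {name: 1.0 - delay / period_ns for name, delay in delays_ns.items()}


clock_ghz = max_clock_ghz(worst_case_delay_ns)
waiting = idle_fraction(worst_case_delay_ns)
```

With these assumed numbers the multiplier fixes the clock at 1 GHz, so the adder sits idle for more than half of every period even on its slowest inputs, and for longer on typical ones.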

Some synchronous design techniques work around the fact that the clock frequency is limited by the slowest component by using multiple clock frequencies on a single chip, and deal in special ways with clock domain crossings where parts of the chip having different clock frequencies communicate. Whether a chip has a single global clock or multiple clocks, it is critical to ensure that clock frequencies are chosen such that all components function properly.

Limited Design Space Exploration

The design of an ASIC has two main “phases”: logical design and physical design. Logical design describes the components on the chip, their functionality, and their connections to each other at a high level. Physical design realizes the logical design as a network of transistors of specific sizes, connected to each other using wires with specific layouts and lengths, governed by the (often extraordinarily complex) rules of a specific fabrication process.

Just as a given problem can typically be solved in software using various different algorithms and in any of a multitude of programming languages, the same logical hardware functionality can usually be implemented in many different ways: varying pipeline lengths, different optimized circuits to perform the same operations, different communication patterns among components, etc. Each choice in the logical design changes the possible set of physical designs, and has an impact on the size, performance, and power consumption of the resulting ASIC.

The procedure that is performed to verify that a chip will run correctly at a given clock frequency is called timing closure. Timing closure is performed on the physical design, and is based on properties of the fabrication process: how quickly signals travel across wires, how quickly transistors of specific sizes switch, etc. Any change to the design at either the logical or physical level after timing closure—even seemingly small changes like physically relocating components relative to each other so that the wires that connect them are routed slightly differently—requires timing closure to be done again to avoid the possibility of incorrect results. Timing closure is a complex process that takes significant effort; for large designs, potentially weeks or months of effort by the design teams for all parts of the chip. Since timing must be closed after every design change, the timing closure process acts as a bottleneck that limits exploration of the space of possible designs.

Clock Power Consumption

Every toggle of a clock signal consumes power. In a naïve synchronous design, this power consumption occurs even for parts of the chip that are not being used. For example, clock signals are still sent to an arithmetic unit even when no arithmetic operations are taking place, which is a waste of power. The need for chips to operate in low-power environments (such as on battery power) has led to more complex designs that prevent such waste through clock gating, where clock signals are distributed only to the parts of a chip that need to be active at any given time. However, the increased complexity of clock-gated designs has its costs: increased design time to determine where the clock signal must be gated and when, and increased verification overhead to ensure that the chip operates correctly with clock gating in place.

Effects of Process Modernization

In early integrated circuits, it was straightforward to deal with clock skew and close timing because there were relatively few transistors on a chip, and each transistor switched relatively slowly compared to the time clock signals took to travel on wires between them. The space of possible designs was significantly easier to explore, and the power consumption of clock signals was effectively a non-issue because clock frequencies were so low.

Modern ASIC fabrication technology, on the other hand, fits tens of billions of transistors on a single chip, each of which switches much faster than before because switching speed increases as transistor size decreases. Clock skew is significantly harder to deal with due to higher clock frequencies and wires that are longer relative to transistor sizes, requiring the design of complex clock distribution networks to ensure proper synchronization, and complex clock gating architectures to address power consumption issues. Timing closure for modern synchronous chips requires significant engineering effort and sophisticated design automation tools, especially when dealing with their many clock domain crossings. In addition, the design space for modern ASICs is orders of magnitude larger. This increased complexity in all dimensions is one reason why the verification and debugging of silicon designs now typically takes well over half of their total development time.

Asynchronous Circuits

By contrast, asynchronous circuits do not use a global clock signal for synchronization. Instead, components communicate directly with each other to synchronize their operations locally.

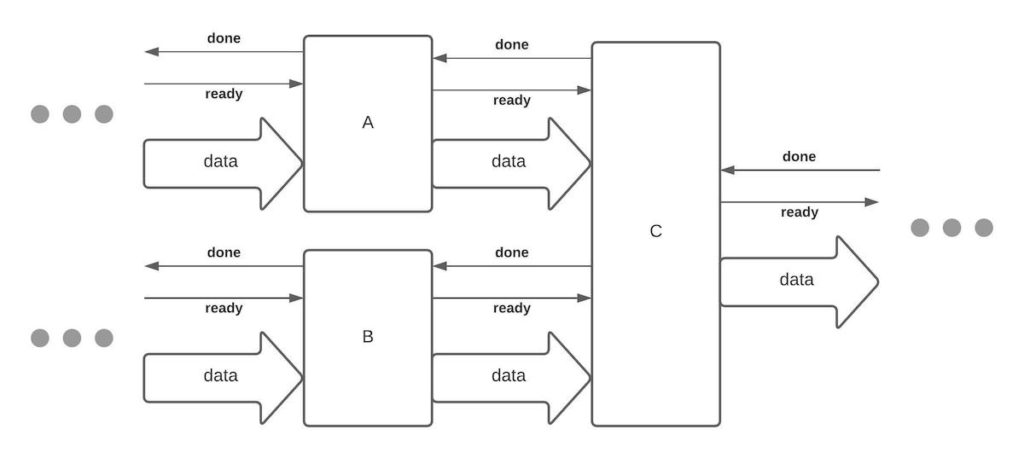

To see an example of how this works, consider the three components A, B, and C in the picture above. A and B each generate an output that feeds into C. When A’s output is valid, it raises (sets to high voltage) an additional handshaking signal (ready) to let C know; B does the same. When C receives those handshaking signals it computes its result using the outputs from A and B and, when it is done, raises its own handshaking signal (done) to tell A and B it has finished using their outputs. When A receives this signal, it lowers (sets to low voltage) its ready signal to let C know that its output is no longer valid; B does the same. When C receives the lowered ready signals, it lowers its done signal to let A and B know that it is waiting for their next outputs. Once A and B have received the lowered done signal the three components are ready to repeat the handshake for the next computational cycle.

A, B, and C perform the same handshaking protocol with any components that feed data to A and B, or that receive data from C. There are other possible handshaking protocols (this particular one is called a four-phase handshake), but they all accomplish the same goal: enabling each component to know when its inputs are ready for consumption, and when its outputs have been consumed.
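
The handshake among A, B, and C can be sketched as a small simulation. This is a minimal Python model, not a description of any actual circuit implementation: the function name `run_handshake_cycle`, the state names, and the random scheduler are all assumptions made for illustration. Each component acts only when the signals it is waiting on have the right values, and the scheduler picks which component gets to act next at random, standing in for arbitrary gate and wire delays.

```python
import random


def run_handshake_cycle(a_out, b_out, compute, seed=0):
    """Simulate one handshake cycle; components act in arbitrary order."""
    rng = random.Random(seed)
    ready = {"A": 0, "B": 0}  # ready signals driven by A and B
    done = 0                  # done signal driven by C
    state = {"A": "start", "B": "start", "C": "await_ready"}
    result = None
    trace = []

    while any(s != "finished" for s in state.values()):
        name = rng.choice(["A", "B", "C"])  # arbitrary relative timing
        if name in ("A", "B"):
            if state[name] == "start":
                ready[name] = 1  # output is valid: raise ready
                state[name] = "await_done_high"
                trace.append(f"{name}.ready=1")
            elif state[name] == "await_done_high" and done == 1:
                ready[name] = 0  # C has used the output: lower ready
                state[name] = "await_done_low"
                trace.append(f"{name}.ready=0")
            elif state[name] == "await_done_low" and done == 0:
                state[name] = "finished"  # free to produce the next output
        else:
            if state["C"] == "await_ready" and ready["A"] and ready["B"]:
                result = compute(a_out, b_out)  # both inputs are valid
                done = 1
                state["C"] = "await_ready_low"
                trace.append("C.done=1")
            elif state["C"] == "await_ready_low" and not (ready["A"] or ready["B"]):
                done = 0  # waiting for the next pair of inputs
                state["C"] = "finished"
                trace.append("C.done=0")
    return result, trace


result, trace = run_handshake_cycle(2, 3, lambda a, b: a + b)
```

Whatever order the scheduler picks, C never computes before both ready signals are high and never lowers done before both are low again, so the result does not depend on the relative delays in this model.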

By letting components know when it is safe to carry out their computations, handshaking signals perform the same synchronization task as the global clock in a synchronous design; effectively, they behave as local clock signals that regulate pairwise component interactions. Doing this synchronization locally rather than globally yields several benefits that directly address the synchronous circuit problems highlighted above.

No Clock Skew

Since there is no global clock in an asynchronous design, there is no clock skew. Asynchronous circuits that obey the same handshaking protocol are compositional, which means that properties about their correctness can be demonstrated (or proven) in isolation and they can then be composed into larger circuits that retain those properties.

Rearranging the physical layout of components in a correct logical asynchronous design has no impact on correctness, though it may have an impact on performance. Similarly, an asynchronous circuit remains correct when replacing a component with another that performs the same computation, even if the new component has a completely different internal structure (because it is faster, or smaller, or has some other desirable property).

The compositionality of asynchronous circuits yields many benefits for design simplicity and assurance, but one of the most important is that by eliminating clock skew it also eliminates the need for timing closure; a correctly designed asynchronous circuit simply runs as fast as it can given its physical implementation.

Average-Case Design

As noted above, each individual component in a synchronous circuit has a maximum clock frequency that it can support, and the overall clock frequency of the circuit is limited by the slowest such component. In an asynchronous design, because there is no overall clock frequency, the performance impacts of slower components are felt locally rather than globally. Moreover, asynchronous circuit designers can optimize for the average-case rather than the worst-case execution of any given component.

Unlike a synchronous arithmetic unit, which we’ve already seen can only operate as fast as its worst-case inputs can be processed, an asynchronous arithmetic unit can take as much time as it needs (and different amounts of time for different sets of operands) without affecting correctness. Therefore, it can be optimized for its performance on typical inputs rather than worst-case ones. The resulting circuits are both easier to verify and less costly in terms of area and power consumption, and have been shown in practice to have significantly higher performance on typical workloads.
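
To make the gap between typical and worst-case inputs concrete, the sketch below measures how far a carry ripples when two 32-bit numbers are added bit by bit, as in the pencil-and-paper method. It assumes a simple ripple-carry adder model and uniformly random operands, neither of which comes from any particular design, and the function names are hypothetical.

```python
import random


def longest_carry_chain(a, b, width=32):
    """Longest run of bit positions a single carry ripples through in a + b."""
    longest = run = 0
    for i in range(width):
        x, y = (a >> i) & 1, (b >> i) & 1
        if x & y:
            run = 1       # both bits set: a new carry is generated here
        elif (x ^ y) and run:
            run += 1      # exactly one bit set: an incoming carry ripples on
        else:
            run = 0       # the carry is absorbed, or there was none
        longest = max(longest, run)
    return longest


def average_chain(samples=10_000, width=32, seed=0):
    """Mean longest carry chain over uniformly random operand pairs."""
    rng = random.Random(seed)
    total = 0
    for _ in range(samples):
        total += longest_carry_chain(
            rng.getrandbits(width), rng.getrandbits(width), width
        )
    return total / samples


worst = longest_carry_chain(0xFFFFFFFF, 1)  # carry ripples across all 32 bits
typical = average_chain()                   # far shorter on random operands
```

Under these assumptions the worst case ripples through every bit, while the typical chain is only a handful of bits long. A clocked adder has to budget for the former on every cycle; an adder that signals its own completion only pays for the chain it actually encounters.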

Wider Design Space Exploration

The fact that asynchronous circuits are compositional and don’t require timing closure makes it easy to rapidly prototype a circuit and then explore the space of possible designs for each of its components to optimize the overall circuit for power, performance, area, and other characteristics. It can also significantly reduce verification overhead, because a specific logical arrangement of components can be shown to function correctly and then realized as any of several physical arrangements of specific components that obey the same protocols without invalidating that correctness.

Energy Efficiency and Voltage Scaling

In contrast to synchronous circuits, which (in the absence of clock gating) consume a base amount of power for clock distribution regardless of the computation being performed, asynchronous circuits consume switching power only when they are actually computing. If a particular asynchronous component, such as an adder or multiplier, is not being used, it does not switch and so draws no dynamic power (only the leakage that any powered transistor incurs). This can result in significant power savings, and makes asynchronous design ideal for low-power applications.

Asynchronous circuits implemented using certain design styles can also exhibit another useful characteristic: voltage scaling. For synchronous circuits, the ability to operate correctly at a given clock frequency relies on the fact that the transistors in the circuit are all being powered at a specific, constant voltage. Transistors switch faster and less efficiently at higher voltages, so a change in voltage (for example, lowering the voltage to reduce the power consumption of the circuit so it can run longer in a battery-powered device) causes a change in speed. A typical synchronous circuit with a clock frequency of 2 GHz at 0.8 V won’t run correctly (and may not run at all) at 0.6 V, because the transistors simply won’t switch fast enough to keep up with the clock.

Some synchronous designs pay a penalty in design complexity to carefully coordinate changes in clock frequency and voltage, but asynchronous circuits scale naturally with changes in voltage. At lower voltage they compute more slowly and handshakes happen less frequently, but they still behave correctly, at least until the voltage is lowered to a level below which the transistors do not switch properly, called the threshold voltage. Voltage scaling allows the same asynchronous design to run fast in high power environments and conserve energy at the expense of speed in low power environments. It also allows different parts of the circuit to be powered at different arbitrary voltages, and for voltages to be dynamically scaled within wide ranges while the circuit is running, which can be done strategically to increase the circuit’s performance for specific applications or its resistance to power side-channel attacks (a topic that we’ll return to in a future post about the 21st Century Cryptography project).

Scalability and Technology Migration

As mentioned above, the increasing transistor density in new ASIC fabrication processes has exacerbated the problems of synchronous design over time. Asynchronous designs, however, scale arbitrarily. Compositionality makes it straightforward to design an asynchronous ASIC at the logical level once and realize it as a physical design in multiple fabrication processes. Of course, performance, power, and area improvements can be realized by optimizing an asynchronous design to take advantage of the strengths of each new fabrication process. However, the correct functioning of the design does not depend on such optimizations, which makes migrating asynchronous designs to new technologies easier.

Asynchronous Design in Practice

So, why isn’t everybody doing asynchronous design? Mostly, it’s because the silicon development ecosystem has grown over the last several decades primarily around synchronous design concepts: they’re taught in every university computer engineering program, they’re well supported by all the design automation tools that are essential for building chips in modern fabrication processes, and they have, for the most part, worked up to this point. However, as the complexity of silicon designs has increased exponentially, the benefits of asynchronous design for performance, power consumption, and design and verification effort have become more appealing. Modern synchronous chips, including those in the device you’re using to read this post, increasingly implement parts of their functionality with asynchronous circuits.

One particular consideration that is often cited as a drawback to asynchronous design is its silicon area overhead, as it is generally more expensive to fabricate larger chips. Asynchronous control logic, which replaces clock distribution logic in synchronous circuits, can take considerable area, and using some design styles an asynchronous circuit can easily end up being twice as large as an equivalent synchronous circuit. However, many asynchronous design styles (including the one we predominantly use at Galois) have significantly lower area overheads, and research efforts at multiple institutions are underway to reduce those overheads further. For example, we recently implemented an asynchronous AES cryptographic core with effectively no area overhead over its synchronous counterpart. Moreover, with the increased transistor density and accompanying design complexity inherent in modern fabrication processes, the ability to achieve excellent performance at low power without extraordinary design effort and the verification benefits of compositional design can often outweigh silicon area considerations.

Asynchronous Design at Galois

At Galois, we believe the benefits of asynchronous design significantly outweigh the drawbacks. We’ve seen in practice that the use of asynchronous circuits reduces design and verification effort, provides higher assurance through compositional design and verification techniques and novel reasoning methods, and can increase security by enabling novel side-channel resistance techniques. Continuing a line of research that has been carried out for several years across multiple institutions, we’re also working on tools and techniques to make asynchronous design more approachable by engineers who have been trained primarily in synchronous design, so that its benefits can be realized more broadly.

That’s why we are so excited to share information about the research and development that has gone into our asynchronous design projects, and to host this year’s IEEE International Symposium on Asynchronous Circuits and Systems (ASYNC 2021) as a virtual conference this September. In future posts, we’ll dive into more detail about our projects, their results, and future research directions.